The Pitfalls of Writing with AI

- Yves Peirsman

- LLMs, Creative writing, Risks

- November 27, 2024

As generative AI becomes more widespread, its potential dangers are becoming increasingly apparent. While it’s well known that Large Language Models sometimes make things up (a phenomenon that some call hallucination and others bullshit), other, more subtle risks are often overlooked. When you use AI in your work or daily life, it’s crucial to understand these hidden dangers.

Danger 1: Lower Creativity

There’s no question AI can make people more creative. Unfortunately, this outcome is far from guaranteed. McGuire et al. 2024 studied the creative benefits of a state-of-the-art poetry generation model and found that one deciding factor is the design of the generative AI system. In their experiments, professional poets judged the creativity of poems produced under three conditions: written solely by humans, generated by AI and then edited by humans, and written in close collaboration between people and AI.

Their first experiment revealed that poems written exclusively by humans were more creative than AI poems that had been edited by humans. As editors, participants proved too reluctant to alter the generated content drastically. However, a follow-up experiment showed that the difference in creativity disappeared when humans and AI co-created poems. In this setup, participants were much more actively engaged in the writing process: they could choose the topic of the poem and took turns with the AI in writing lines. In other words, when people work with generative AI on creative tasks, it’s crucial that they act as active co-creators rather than passive editors.

Danger 2: Lower Collective Novelty

But even when AI makes people more creative, it may come with risks. Doshi and Hauser 2024 asked almost 300 people to write an eight-sentence story for a young adult audience. They assigned participants to three groups: one wrote without AI, a second had access to one story idea from an AI model, and a third could draw on up to five AI-generated ideas. Six hundred readers then evaluated the stories on novelty and usefulness. To count as useful, a story had to be appropriate for its audience and of sufficient quality to be developed into a complete book and accepted by a publisher.

The experiment showed that AI assistance improved both the novelty and the usefulness of the stories. Participants who had access to five AI suggestions benefited more than those with access to just one. While AI had no effect on the performance of the most creative writers, it boosted the scores of less creative writers to the same level as their more creative peers. However, there was a catch: AI-assisted stories tended to be more similar to each other than those written without AI. This presents authors with a real dilemma: AI can make them more creative, but it may cost them some of their individuality. And if AI assistance becomes widespread among publishers and self-publishers, we could see a reduction in overall originality across the industry.

Figure: AI assistance increases the stories’ similarity to each other (right panel: grey = no AI assistance, blue = at most 1 AI suggestion, red = at most 5 AI suggestions).

Danger 3: Poorer Learning

Other warning signs come from fields beyond creative writing. One example is education, where the use of generative AI is perhaps most controversial. Bastani et al. 2024 showed that, when used incorrectly, generative AI can harm learning. They conducted an experiment in a Turkish high school where three groups of students had to solve a series of math problems. One group worked without AI assistance, a second used a basic AI assistant similar to ChatGPT, and a third used a more specialized AI system called GPT Tutor. During the practice sessions, AI assistance significantly improved performance: compared with the unassisted control group, students using the basic assistant scored 48% higher on the exercises, and those using GPT Tutor scored a whopping 127% higher.

However, when all students took a final mathematics test without support, the groups that had previously received help from AI did not perform any better than the control group. In fact, the students who had used the basic AI assistant scored 17% lower than those who had practised without AI. Careful analysis of the results showed that students with access to the basic AI assistant mainly used it to ask for the solution to an exercise, without trying to understand the problem. While the basic assistant was happy to oblige, the more advanced GPT Tutor was set up to offer hints rather than direct answers. This better design avoided the negative effects, but it did not lead to higher scores on the final exam either.

Danger 4: Inherited Biases

Another risk to learning is that AI models are often prone to systematic errors. When they are trained on data that contains unwanted biases, such as a preference for male job candidates, models will often replicate those biases. A recent study by Lucía Vicente and Helena Matute highlights the risk of such AI biases when people work together with an AI assistant. In their experiments, students were asked to classify images of fictitious tissue samples as either diseased or healthy. The rule was straightforward: if an image contained more dark cells than light ones, the tissue was to be classified as diseased.

The researchers divided the students into two groups. One group worked with a biased AI system that consistently misclassified samples with only 40% dark cells (and therefore more light cells than dark ones) as diseased; the other group worked independently. In the first phase, the students assisted by the AI system misclassified significantly more of these 40% dark samples than the unassisted group did. In the second phase, both groups continued their work without AI assistance. Remarkably, the researchers found that the bias persisted in the group that had previously been assisted by AI, albeit to a lesser extent. This demonstrates that people exposed to AI biases can internalize them and carry them into their independent work.

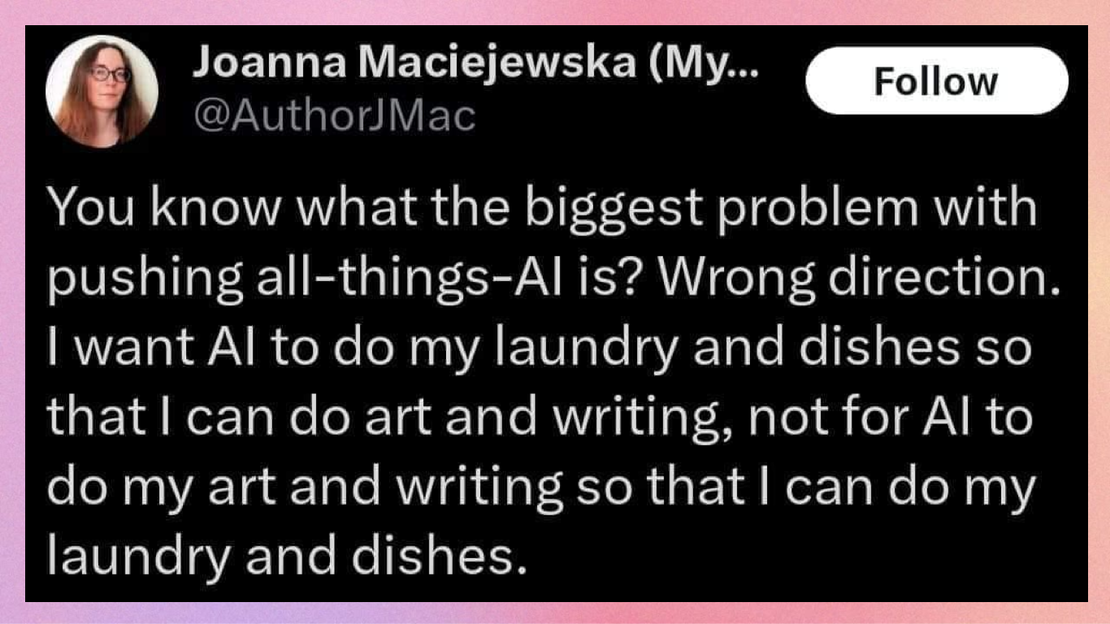

Conclusion

Look. I know you’re using generative AI. After all, why wouldn’t you? Large Language Models can save us time and make us more creative. I confess: even I used ChatGPT to make some of the paragraphs above easier to read. So I’m not trying to discourage you from brainstorming or writing with the help of a machine. I’m just telling you to be careful. If you rely on AI as a tool for creativity, it’s essential to treat it as a collaborator rather than a shortcut. Don’t immediately ask for the solution to a problem if your intention is to learn. Don’t simply copy-paste its text and change only a word or two. Always remain wary of biases and errors. If we can all do that, AI will pose a threat to no one. Instead, it will fulfill its promise as a tool that helps us (how would ChatGPT put it?) become a better version of ourselves.